Before we dive into the technical depths of the Java Stream API, I want to share a personal story from my early days as a computer science student. Like at many universities, especially in the first years, our curriculum was heavily focused on C and C++. In fact, many of our professors believed—and often reminded us—that if you truly mastered C++, you could pick up any other language with ease.

And honestly, they had a point.

Back then, with my limited exposure and young developer mindset, C++ felt like the holy grail. I couldn’t understand why anyone would even need another language. To my naive brain, it had everything you’d ever need: blazing speed, full control, and all the flexibility you could ask for. Consequently, I became a true C++ evangelist and proudly dismissed other languages, throwing shade at anything else. That was, until I met Java’s Stream API.

The first time I encountered a Java stream, I was genuinely surprised and, frankly, a little shocked. The elegance, expressiveness, and conciseness were unlike anything I had seen in C++. For the first time, code felt… beautiful. While I didn’t fully understand how it worked back then, I instantly knew I wanted to learn more.

From that moment, something clicked. I made a personal promise to embrace this new paradigm and learn everything I could about it. On that day, I swore to myself that I’d never write a classic for loop again. Looking back now, years later, I think I’ve kept that promise—I’ve probably written fewer than ten traditional for loops since then. Ultimately, this article is my way of sharing that journey—from the early confusion to the deep appreciation I’ve developed for the Stream API—and helping others see the same beauty I once stumbled upon.

1. Introduction

In this comprehensive guide, we’ll take a deep dive into the Java Stream API, one of the most powerful features introduced in Java 8. It was designed for processing collections in a functional, declarative style. Here, you’ll learn not only how to use the Stream API effectively but also how it works under the hood. Furthermore, we will explore what makes it so performant and why it’s a game-changer for writing cleaner, more expressive, and maintainable code.

Together, we’ll explore the true potential of streams, from basic operations to advanced patterns. We’ll also uncover the elegance and flexibility that make the Stream API a favorite among modern Java developers. Whether you’re new to streams or looking to deepen your understanding, this article will equip you with the knowledge to write smarter, more efficient Java code.

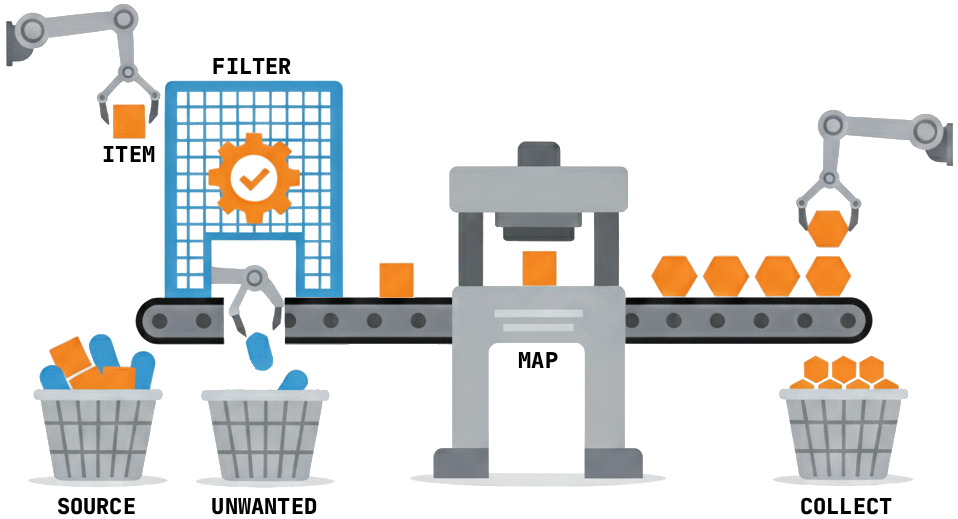

2. What Is a Stream? Hint: It’s a Factory, Not a Warehouse

Right, let’s clear up the biggest misconception first: a Java Stream is definitely not a Collection. Streams are an abstraction.

I know it’s a bit strange to start by explaining what something isn’t (see Ironic process theory), but this is a crucial distinction. A Collection, such as a List or Set, is like a warehouse—it’s a data structure that holds all of its items in memory. You can add items, remove them, and check how many you have.

A Stream, on the other hand, is the factory production line.

Imagine your data elements are raw materials at the start of the line. The stream itself is the set of workstations—the conveyor belt and all the robotic arms—that process each element as it passes through. Each station performs a single, specific task:

- One robot could be a quality checker, inspecting each element and discarding any that don’t meet the standard (a

filter()operation). - Another station might paint or label the element, transforming it into something new (a

map()operation). - Finally, a concluding station could count or package all the finished products (a terminal operation like

count()orcollect()).

The key is that the production line doesn’t store the items; it processes them as they flow through. This is the fundamental difference, and it’s what makes streams so powerful and efficient.

3. The Anatomy of a Stream: The Pipeline

Every efficient factory has a well-designed production line, and Java Streams are no different. They operate as a stream pipeline, which is a sequence of operations that process your data from raw material to finished product. Think of your stream pipeline as having three crucial sections.

3.1. Source (The Supply Bay)

This is where your raw materials—your data elements from a List, Set, or array—are initially stored. The process begins when the first robotic arm picks up an item from this source and places it onto the conveyor belt, initiating the stream.

3.2. Intermediate Operations (The Workstations)

Once on the conveyor belt, items move through a series of specialized workstations. These are your intermediate operations. Each one takes an item, performs a task, and then passes the transformed item along to the next station. Importantly, they are lazy, meaning they don’t start working until a final order is placed.

- The Quality Control Gate (

filter()from our example): This station acts as a checkpoint. Items that meet the quality criteria pass through, while those that don’t are shunted off the main line. - The Press/Transformer (

map()from our example): Next, this station is like a hydraulic press. An item enters, undergoes a transformation (for example, it’s pressed into a new shape), and exits as a new, modified item.

Because these operations can be chained together, they allow the Java runtime to optimize the entire process before it even starts.

3.3. Terminal Operation (Packaging & Shipping)

This is the grand finale. The terminal operation is the instruction that tells the entire production line to start processing. Once an item has passed through all the workstations, it arrives at this final stage. Here, the items are gathered and assembled into the final product.

- The Final Packing Station (

collect()from our example): At the end of the line, a final robotic arm collects all the finished goods and places them into a result container. This signifies the completion of the stream’s work.

After the terminal operation is called, the pipeline is executed from start to finish. The stream is then “consumed” and the process is complete. To process the original items again, you would need to set up a new stream. This modular design makes streams incredibly powerful for writing clear and maintainable code.

4. Goodbye, for Loops: Declarative vs. Imperative

One of the most immediate benefits of adopting streams is the dramatic improvement in code readability. Think about a common task: filtering a collection based on some criteria and then transforming the remaining elements. With a traditional for loop, your core business logic gets buried inside procedural ceremony. You have to manually initialize a list for the results, write the loop boilerplate, nest an if statement for the filter, and then manually add the result to your new list. In short, the what you’re doing is obscured by the how you’re doing it.

4.1. A Simple Example: Sum of Squares

Imagine you’re given a list of integers and you need to find the sum of the squares of only the even numbers.

4.2. External Iterator: The Traditional for Loop Approach

Using a traditional for loop means you have to micromanage every step. The whole iteration needs to be controlled by yourself: what to do, when to do, how to do. You need a place to store your running total, loop through everything, check each number, perform the calculations, etc. The thought process goes something like this:

- Create a variable

sumand set it to zero. - Start a loop to go through each number in the list.

- Inside the loop, check if the number is even.

- If it is, calculate its square.

- Then, add that result to

sum.

This translates into the following code:

final List<Integer> numbers = List.of(1, 2, 3, 4, 5, 6, 7, 8);

int sumOfSquares = 0;

for (final int number : numbers) {

if (number % 2 == 0) {

final int square = number * number;

sumOfSquares += square;

}

}

// You OWE me! My for loop counter went up because of you.Although this code works perfectly fine, you have to read it carefully to understand the goal. The core logic is mixed with the boilerplate of looping and managing state.

4.3. Internal Iterator: The Declarative Stream Approach

Now, let’s rephrase the problem as a set of instructions, like a recipe. This is the stream way of thinking. Our recipe is:

- Start with our list of numbers.

- Keep only the even ones.

- Next, turn each of those into its square.

- Finally, sum them all up.

This recipe translates almost directly into a Java Stream pipeline. Each step becomes a clear, self-contained operation, abstracting the implementation details about how the iteration is happening:

final List<Integer> numbers = List.of(1, 2, 3, 4, 5, 6, 7, 8);

final int sumOfSquares = numbers.stream()

.filter(number -> number % 2 == 0)

.mapToInt(number -> number * number)

.sum();Notice how the stream version reads like a story that follows the problem description. There are no temporary variables to manage and no boilerplate loop code. For a beginner, this can be a much more intuitive way to translate a problem statement into working code, since you’re simply describing the steps of the data transformation.

5. Under the Hood: How Streams Really Work

We’ve seen how streams can replace for loops and make code more readable. But how does Java pull this off so efficiently? The answer lies in some brilliant engineering. Let’s pop the hood and look at the internal machinery.

5.1. The Secret Ingredient: The Spliterator

The heart of every stream is an interface called a Spliterator. If the Stream is our conveyor belt, the Spliterator is the smart robotic forklift that feeds it. A Spliterator has two primary jobs:

- Advancing the Stream (

tryAdvance): For a simple, sequential stream, the Spliterator grabs one item at a time from the source and sends it down the pipeline. It processes one element completely through all stages before touching the next one. - Splitting the Work (

trySplit): This is the genius behind parallel streams. The forklift can look at its remaining workload (e.g., a pallet of 1,000 items) and split it in half. It then hands one half to a new, identical forklift, so they can both work on smaller batches simultaneously. This process is what makes parallel processing possible.

5.2. The Pipeline is a Blueprint, Not the Factory

When you write a stream pipeline, it’s crucial to understand that you are not running any code yet. You are simply designing a blueprint for the production line.

final List<Integer> numbers = List.of(1, 2, 3, 4, 5, 6, 7, 8);

// This code does NOTHING! No filtering, no mapping.

// It's just a plan for what to do later.

final IntStream blueprint = numbers.stream()

.filter(n -> n % 2 == 0)

.mapToInt(n -> n * n);Think of this code as handing over a set of instructions. The items don’t start moving, and the machines don’t turn on until you give the final command—the terminal operation.

To prove this, let’s add some print statements:

final List<Integer> numbers = List.of(1, 2, 3, 4, 5, 6, 7, 8);

final IntStream blueprint = numbers.stream()

.filter(n -> {

System.out.println("Filter checking: " + n);

return n % 2 == 0;

})

.mapToInt(n -> {

System.out.println("Map squaring: " + n);

return n * n;

});When you execute this code, your console remains empty. This is a perfect demonstration of lazy evaluation. Without a terminal operation, the intermediate steps are never actually run.

Now, let’s add a terminal operation like .count() to trigger the execution:

final List<Integer> numbers = List.of(1, 2, 3, 4, 5, 6, 7, 8);

final IntStream blueprint = numbers.stream()

.filter(n -> {

System.out.println("Filter checking: " + n);

return n % 2 == 0;

})

.mapToInt(n -> {

System.out.println("Map squaring: " + n);

return n * n;

});

final long numberOfEvenElements = blueprint.count(); // <--With the addition of .count(), the blueprint is finally put into action. Running this code now will display the print statements, showing that the terminal operation triggers all the preceding lazy steps. A Stream, therefore, is an abstraction over a sequence of operations, not a data structure that stores elements.

5.3. Streams are Non-Mutating

One of the most important guarantees of the Stream API is that it is non-mutating. This means that the stream pipeline never modifies the original data source. When you filter, map, or sort a collection using a stream, you are not changing the original List or Set it came from. The key is that a stream pipeline is designed as a read-only traversal that produces new values, rather than an in-place modification tool.

5.3.1. The Stream as a “View”

When you call .stream() on a collection, you are not creating a copy of the data. You are creating a Stream object, which is essentially a “view” or a “wrapper” around your original collection. It holds two main things:

- A reference to the original data source.

- A sequence of operations to be performed (the blueprint).

Think of it like opening a file in read-only mode. The application can read the file’s contents and use that information to create something new (like a summary document), but it is prevented from writing back to the original file. The stream is a read-only view of your collection.

5.3.2. Streams Are All About Producing New Things

Let’s trace what happens to a single element. Imagine we have a List<User> and we want to get the names of active users.

record User(String name, boolean active) {}

final List<User> userList = List.of(new User("Alice", true), new User("Bob", false));

final List<String> activeNames = userList.stream() // 1. Create the "read-only view"

.filter(User::active) // 2. Filter the users

.map(User::name) // 3. Map the names

.toList(); // 4. Collect into a NEW listHere is the step-by-step process for the first user object, “Alice”:

stream(): AStreamobject is created that points touserList. No data has been copied or read yet.filter(): The stream’s internal machinery (the Spliterator) fetches the first element fromuserList. It gets a copy of the reference pointing to the “Alice” object. Thefilteroperation callsalice.isActive(), which returnstrue. Because the condition passes, the reference to “Alice” is passed to the next stage. Nothing has been changed.map(): Themapoperation receives the reference to the “Alice” object. It then calls the functionuser -> user.getName(). This function executesalice.getName(), which reads thenamefield and returns a newStringobject:"Alice". It does not modify the originalUserobject. Themapoperation’s job is to produce a new value based on the input.collect(): The new string"Alice"is then passed to the collector, which adds it to a brand newArrayListthat is being built internally.

The “Bob” object is fetched next. The filter returns false, so the pipeline stops processing that element immediately. The original userList was only ever read from; it was never written to.

5.3.3. A Crucial Distinction: Collection vs. Element Mutability

This brings us to a critical point. The Stream API guarantees it will not mutate the structure of the source collection (it won’t add or remove elements).

However, the API cannot prevent you from writing code that mutates the state of the objects within the collection, though doing so is a major violation of stream principles and is considered bad practice.

For example, consider this code:

// Using Lombok for generating boilerplate code

@Setter

@NoArgsConstructor

@AllArgsConstructor

class MutableUser {

private boolean active;

}

// This code MUTATES the objects in the original list

list.stream()

.filter(user -> user.isInactive())

.forEach(user -> user.setActive(true)); // DANGEROUS: Modifying source stateWhile this is possible, it defeats the purpose of streams. It creates side effects, makes the code unpredictable, and will cause catastrophic, non-deterministic bugs if you ever try to run it as a parallel stream.

So, the stream pipeline is non-mutating because its operations (filter, map, sorted, etc.) are designed by contract to be pure functions that produce new results, leaving the original source data untouched.

6. The Golden Rule of Streams: Lazy Evaluation

One of the most powerful secrets behind the performance of Java Streams is Lazy Evaluation. Think of it as the factory’s ultimate efficiency strategy: the machines don’t start until the final production order is given.

6.1. Intermediate vs Terminal Operations

- Intermediate Operations (like

filter(),map(),sorted(), etc.): These are the lazy workstations. Calling one of these methods simply adds another step to your factory’s blueprint. They always return a newStreamso you can continue building your pipeline. - Terminal Operations (like

collect(),count(),forEach(), etc.): In contrast, these are the eager “START” buttons. Calling a terminal operation is the signal that your blueprint is complete. It kicks off all the lazy intermediate operations and produces a final result.

6.2. Optimization Through Operation Fusion

Because the pipeline is just a blueprint, the Java runtime can act as a clever factory manager and optimize the layout. One of its best tricks is operation fusion. Instead of running all items through a filter step and storing them in a temporary list before moving to a map step, the runtime fuses these operations.

It builds one integrated workstation that does both jobs at once. For a single item:

- It is checked by the

filterlogic. - If it passes, it is immediately transformed by the

maplogic.

Only after that entire sequence is complete for one item does the Spliterator provide the next one. This is incredibly efficient because no intermediate collections are created. You don’t waste memory on temporary lists, which is why a stream can be more performant than a for loop that creates temporary collections.

6.3. Chaining Operations: Readability Meets Performance

Let’s explore a practical example to see why chaining is so powerful. Imagine we need to implement a business rule: from a list of people, generate formatted website identifiers, but only for adults residing in the “USA”.

This multi-step logic presents a stylistic choice when using a stream. You could write a single, complex filter operation to check both conditions, or you could chain multiple, simpler ones. Let’s compare these two approaches to see how the decision impacts not just readability, but also performance.

6.3.1. First approach—monolithic operations:

final List<Person> personList = List.of(

new Person("John", "Doe", "USA", 30),

new Person("Alice", "Smith", "USA", 25),

new Person("Marie", "Dupont", "France", 28),

new Person("Jack", "Brown", "United Kingdom", 19),

new Person("Jill", "Johnson", "Canada", 11),

new Person("Bob", "Davis", "USA", 15)

);

final List<String> identifiersFromUnitedStates = personList

.stream()

.filter(person -> person.getAge() >= 18 && person.getCountry().equals("USA"))

.map(person -> {

final String fullName = person.getFirstName() + " " + person.getLastName();

return fullName.toLowerCase().replace(" ", "_");

})

.toList();6.3.2. Second approach—small, chained operations:

final List<Person> personList = List.of(

new Person("John", "Doe", "USA", 30),

new Person("Alice", "Smith", "USA", 25),

new Person("Marie", "Dupont", "France", 28),

new Person("Jack", "Brown", "United Kingdom", 19),

new Person("Jill", "Johnson", "Canada", 11),

new Person("Bob", "Davis", "USA", 15)

);

final List<String> identifiersFromUnitedStates = personList

.stream()

.filter(person -> person.getAge() >= 18)

.filter(person -> person.getCountry().equals("USA"))

.map(person -> person.getFirstName() + " " + person.getLastName())

.map(fullName -> fullName.toLowerCase().replace(" ", "_"))

.toList();At first glance, the first approach might seem faster. However, thanks to operation fusion, both approaches perform almost identically. The Java Stream framework is smart enough to fuse the chain of operations in the second approach into a single, efficient pass.

The real difference lies in readability and maintainability. The second approach is often better because each operation has a single responsibility, making the code easier to read, debug, and modify. This frees you to focus on writing expressive code, confident that performance is already handled.

7. Advanced Stream Optimizations

The intelligence of the Stream API doesn’t stop there. The framework uses metadata about the data source to perform even more aggressive optimizations.

7.1. Stream Characteristics: The Spliterator’s “ID Badge“

Our “smart robotic forklift” (the Spliterator) doesn’t just show up for work; it arrives with a detailed specification sheet for the materials it’s carrying. The factory manager (the Java runtime) reads this spec sheet to understand the nature of the incoming data and to plan the most efficient production run possible.

These specifications are called characteristics. Let’s look at what each one tells our factory manager.

7.1.1. Data Shape and Order

These characteristics describe the arrangement of the elements.

ORDERED- What it means: The elements have a defined, repeatable sequence.

- Factory Analogy: The items on the pallet are arranged in a specific numbered order (1, 2, 3…).

- Found in:

List,Array.

DISTINCT- What it means: Every element is unique; there are no duplicates.

- Factory Analogy: Every item on the pallet has a unique serial number.

- Found in:

Set.

SORTED- What it means: The elements are already sorted, either by their natural order or a specific comparator.

- Factory Analogy: The items are pre-arranged on the pallet by size, from smallest to largest.

- Found in:

TreeSet, or the result of a.sorted()operation.

7.1.2. Size and Structure

These characteristics describe the size of the data source and how it behaves when split.

SIZED- What it means: The exact number of elements is known in advance.

- Factory Analogy: The pallet comes with a manifest stating “Contains exactly 1,000 items.”

- Found in: Most collections like

ArrayListandHashSet.

SUBSIZED- What it means: When the

Spliteratoris split (trySplit), the resulting newSpliteratorsare alsoSIZED. - Factory Analogy: When our forklift splits a pallet in half, it also hands over a new manifest for each half with the exact count.

- Found in: Most

SIZEDcollections.

- What it means: When the

7.1.3. Data Integrity and Safety

These characteristics provide guarantees about the data itself and the source collection.

NONNULL- What it means: None of the elements are

null. - Factory Analogy: A quality guarantee: “No empty boxes on this pallet.”

- Found in: Certain specialized collections or after an operation like

.filter(Objects::nonNull).

- What it means: None of the elements are

IMMUTABLE- What it means: The source data cannot be changed structurally (no adding/removing elements) during the stream’s execution.

- Factory Analogy: The original crate of materials is sealed and tamper-proof while the items are on the conveyor belt.

- Found in:

List.of()(Java 9+ immutable collections), arrays.

CONCURRENT- What it means: The source can be safely modified by other threads without causing issues.

- Factory Analogy: The source material bin can be refilled by another worker while our forklift is taking items from it.

- Found in: Concurrent collections like

ConcurrentHashMap.

7.1.4. Automatic Stream Optimizations

This isn’t just academic; these characteristics directly enable powerful, automatic optimizations. When you build your stream pipeline, the framework checks these “badges” and can decide to skip entire operations:

- If you call

.distinct()on a stream that comes from aHashSet(which is alreadyDISTINCT), the stream framework is smart enough to do nothing. The operation is essentially free. - If you call

.sorted()on a stream that comes from aTreeSet(which is alreadySORTED), the framework can skip the entire sorting process if the comparator is the same. - If you call

.toArray()on aSIZEDstream, the framework knows the exact size of the array to create, avoiding inefficient resizing operations.

This metadata-driven approach is a key reason why streams are not just readable, but also highly performant, as they can adapt their execution strategy based on the known properties of the source data.

7.2. Short-Circuiting: The Emergency Stop Button

Imagine you have a production run of a million items, but your order is simple: “Find the first item that is painted red.”

A naive factory manager would process all one million items, collect all the red ones, and then hand you the first from that list. An intelligent manager, however, would install an emergency stop button. The moment the first red item is spotted, the manager hits the button, the entire conveyor belt screeches to a halt, and the item is presented to you immediately.

This is short-circuiting. It’s the ability of a stream pipeline to stop processing elements as soon as the desired result is found.

Some operations are designed to be short-circuiting:

- Intermediate Operations:

limit(n): This is a classic short-circuiting operation. It tells the pipeline, “I only need the firstnitems that make it this far.” After then-th item is found, the stream can stop requesting elements from the source.

- Terminal Operations:

findFirst()/findAny(): These stop as soon as they find one element that matches the preceding filter conditions.anyMatch(predicate): Returnstrueand stops the moment it finds any element that satisfies the predicate.allMatch(predicate): Can short-circuit. Returnsfalseand stops the moment it finds any element that doesn’t satisfy the predicate.noneMatch(predicate): Can short-circuit. Returnsfalseand stops the moment it finds any element that does satisfy the predicate.

7.2.1. The Stream Approach: Declaring Your Goal

Short-circuiting is a massive performance optimization. It allows you to work with potentially massive—or even infinite—streams efficiently. For example, the code below will find the first even number greater than 10 and return almost instantly, without processing the entire list:

final Optional<Integer> firstMatch = largeListOfNumbers.stream()

.filter(n -> n > 10)

.filter(n -> n % 2 == 0)

.findFirst(); // Stops as soon as the first match is foundWithout short-circuiting, this simple query on a large dataset would be needlessly slow. It’s another example of how the Stream API is designed not just for readability, but for intelligent, efficient execution. This code is concise and its intent is unmistakable. The short-circuiting behavior isn’t something you have to implement; it’s a built-in feature of the operation you chose.

7.2.2. The for Loop Equivalent: A Manual Implementation

To achieve the exact same result with a for loop, you have to manually implement the entire search-and-stop procedure. This involves more “ceremony” and requires you to manage the state yourself.

// Manually implement the search, stop, and result handling

Integer foundNumber = null;

for (final int n : largeListOfNumbers) {

if (n > 10 && n % 2 == 0) {

foundNumber = n;

break; // CRUCIAL: Manually stop the loop

}

}This example perfectly illustrates that the benefit of streams isn’t just about writing less code. It’s about writing smarter, safer code that more clearly communicates its purpose.

7.3. Infinite Streams: Streams Without End

What if you could hook your production line to a magic generator that produces items forever? This is the concept behind infinite streams. They are created with static methods like Stream.generate() and Stream.iterate().

Stream.generate(Supplier<T>): This is a cloning machine. It takes a supplier and calls it repeatedly to produce a stream of independent elements, like random numbers.

final Stream<Double> randomNumbers = Stream.generate(Math::random);Stream.iterate(seed, UnaryOperator<T>): This is a sequential assembler. It starts with aseedvalue and then applies a function to the previous element to create the next one. This is perfect for sequences like even numbers.

final Stream<Integer> evenNumbers = Stream.iterate(0, n -> n + 2);The key difference is that Stream.iterate()Stream.generate()

The golden rule here is that you must use a short-circuiting operation like limit() to terminate an infinite stream. Otherwise, your program will run forever.

8. Unleash the Power: A Look at Parallel Streams

So far, we’ve designed an incredibly efficient single production line. But what if your factory has more than one CPU core? What if you could instantly set up multiple identical production lines, split your raw materials among them, and process everything simultaneously?

That’s exactly what parallel streams do. With a single command, you can instruct the Stream API to automatically partition your data, process it concurrently using multiple threads, and then combine the results. This can lead to massive performance gains, especially on modern multi-core processors.

8.1. The Simplicity of Going Parallel

One of the most beautiful aspects of the Stream API is how astonishingly simple it is to switch from a sequential to a parallel stream. You don’t need to write complex multithreading code—you just change one word.

// Our familiar sequential stream

List<String> sequentialResult = userList.stream()

.filter(User::isActive)

.map(User::getName)

.collect(Collectors.toList());

// The "high-performance" parallel version

List<String> parallelResult = userList.parallelStream() // That's it!

.filter(User::isActive)

.map(User::getName)

.collect(Collectors.toList());This incredible ease of use is a direct payoff for adhering to a functional style, especially the principle of statelessness. Because operations like filter and map are self-contained, the Java runtime can safely partition the work across multiple cores without any risk of interference.

By calling .parallelStream() instead of .stream(), you’ve told Java to execute the pipeline concurrently. All the intermediate and terminal operations remain exactly the same, but the underlying execution engine transforms into a powerful, parallel powerhouse. This is the true elegance of the functional style: it frees us, the developers, from the complex mechanics of concurrency and allows us to focus on our logic, while the runtime handles the complex task of parallelization.

8.1.1. How It Works: The Spliterator’s Moment to Shine

This is where our friend the Spliterator and its trySplit() method become the star of the show. When you call .parallelStream(), the framework repeatedly calls trySplit() on the source data.

Think of it like our smart forklift looking at its pallet of 1,000,000 items and splitting it in half, giving 500,000 to a new forklift. They might split their workloads again, creating a team of forklifts, each with a smaller chunk of data. Each forklift then feeds its chunk to its own production line (a thread running on a CPU core). Once all the partial results are ready, the framework intelligently combines them into the final result.

8.2. Caveats of Parallel Streams

Parallelism isn’t a silver bullet. Firing up extra production lines and coordinating the work comes with an overhead cost and some associated risks that need to be know and properly managed.

“With great power comes great responsibility”

8.2.1. Understand the Data

- The Overhead Cost: For small datasets or extremely simple operations, the time it takes to split the data, manage the threads, and combine the results can be more than the time saved. In these cases, a simple sequential stream is often faster.

- The Stateless Rule is Law: Your stream operations must be stateless. Imagine workers on different assembly lines all trying to press the same counter button. The final count would be chaotic and wrong. Each operation must be self-contained and not modify a shared state. If your lambdas modify a shared variable, a parallel stream will lead to unpredictable and buggy results.

- Order is Not Guaranteed: In a parallel stream, chunks of data are processed concurrently. The element that was first in the original list might not be the first one to finish processing. If the order of execution matters, you must either use a sequential stream or specific operations like

forEachOrdered.

Use parallel streams for large datasets where the processing for each element is non-trivial and independent. For small collections or simple tasks (like getting a name), stick with sequential streams to avoid unnecessary overhead.

8.2.2. Understand the Workload

While parallel streams seem like magic, they are a specialized tool. Using the wrong tool for the job can make your application’s performance dramatically worse. The most critical rule to understand is this: Parallel streams are designed for CPU-bound tasks, not I/O-bound tasks.

8.2.2.1. CPU-Bound: The Ideal Scenario

A CPU-bound task is one where the limiting factor is the speed of your processor. The CPU is doing intense work like complex mathematical calculations, data transformations, or in-memory sorting.

- Factory Analogy: This is like a task that requires a lot of intricate assembly work for each item. The worker is constantly busy thinking and manipulating the item. Adding more assembly lines (CPU cores) with more workers directly speeds up the total production because the work itself is the bottleneck.

This is where parallel streams shine. They are optimized to take a big computational problem, split it across your CPU cores, and solve it faster.

Imagine you need to perform a computationally expensive operation on every number in a large list. This is a classic CPU-bound task. For this example, our “complex calculation” will be a loop that simulates heavy processing:

public class CpuBoundTask {

public static double performComplexCalculation(final int number) {

System.out.println("Processing " + number + " on thread: " + Thread.currentThread().getName());

// Burn CPU cycles and simulate work

return IntStream.range(0, 5_000_000)

.mapToDouble(index -> Math.sin(number) * Math.tan(index))

.sum();

}

}Then, run this heavy processing task using a parallel stream:

final List<Integer> numbers = IntStream.rangeClosed(1, 20).boxed().toList();

System.out.println("Starting CPU-bound task with parallel stream...");

final long startTime = System.currentTimeMillis();

// Use a parallel stream to distribute the CPU work across multiple cores

final List<Double> results = numbers.parallelStream()

.map(CpuBoundTask::performComplexCalculation)

.toList();

final long duration = System.currentTimeMillis() - startTime;

System.out.println("Finished in " + duration + "ms.");

System.out.println("Results: " + results);The output will look something like:

Starting CPU-bound task with parallel stream...

Processing 12 on thread: ForkJoinPool.commonPool-worker-9

Processing 5 on thread: ForkJoinPool.commonPool-worker-3

Processing 7 on thread: ForkJoinPool.commonPool-worker-6

Processing 17 on thread: ForkJoinPool.commonPool-worker-5

Processing 15 on thread: ForkJoinPool.commonPool-worker-2

Processing 1 on thread: ForkJoinPool.commonPool-worker-8

Processing 16 on thread: ForkJoinPool.commonPool-worker-7

Processing 10 on thread: ForkJoinPool.commonPool-worker-1

Processing 20 on thread: main

Processing 2 on thread: ForkJoinPool.commonPool-worker-4

Processing 3 on thread: ForkJoinPool.commonPool-worker-4

Processing 9 on thread: ForkJoinPool.commonPool-worker-1

Processing 11 on thread: ForkJoinPool.commonPool-worker-8

Processing 6 on thread: ForkJoinPool.commonPool-worker-6

Processing 18 on thread: ForkJoinPool.commonPool-worker-7

Processing 19 on thread: main

Processing 14 on thread: ForkJoinPool.commonPool-worker-2

Processing 4 on thread: ForkJoinPool.commonPool-worker-3

Processing 13 on thread: ForkJoinPool.commonPool-worker-5

Processing 8 on thread: ForkJoinPool.commonPool-worker-9

Finished in 1109ms.

Results: [630089.9896782864, 680878.1486552204, 105670.07778900526, -566690.5752738896, -718038.5268574554, -209225.16825260132, 491948.845152396, 740827.3590626172, 308592.61555107665, -407360.755550363, -748788.5266391742, -401783.5795511421, 314619.3376562991, 741762.6867639524, 486932.842474722, -215580.81157991322, -719890.4616698261, -562336.14124546, 112227.43409399812, 683609.6240907633]

8.2.2.1.1 How the Common Pool Works: The “Work-Stealing” Strategy

Let’s discuss a bit based on the output that we are seeing above. By default, every parallel stream will use the common pool for executing its tasks. It’s a shared, static ForkJoinPool instance available across your entire application. Its primary job is to efficiently run CPU-intensive tasks using a “work-stealing” algorithm. The common pool is an implementation of the Fork-Join framework, which is based on a divide-and-conquer principle. The real magic is in its work-stealing strategy, designed to keep all CPU cores as busy as possible.

Here’s the process:

- Task Queues: Each worker thread in the pool has its own double-ended queue (a deque) where it stores tasks.

- LIFO Order: A thread processes tasks from the head of its own queue in a “Last-In, First-Out” (LIFO) order. This is efficient because the task it just added is the most likely to still be in the CPU’s cache.

- Work-Stealing: When a worker thread finishes all the tasks in its own queue, it doesn’t just sit idle. It looks at the queues of other threads and “steals” a task from the tail of their queue.

- FIFO Stealing: It steals in a “First-In, First-Out” (FIFO) order. By taking the oldest task from another thread, it reduces the chance of interfering with what that thread is actively working on and helps complete the largest chunks of the overall job.

This work-stealing mechanism ensures that no thread is idle as long as there is work to be done anywhere in the pool. It’s a highly efficient system for tasks that can be broken down into smaller pieces, like processing elements in a parallel stream.

8.2.2.1.2. How the JVM Decides Common’s Pool Capacity

The JVM determines the capacity (or parallelism level) of the common pool with a very simple and logical rule.

By default, the number of threads in the common pool is set to one less than the total number of available processor cores reported by the JVM.

The formula is: Runtime.getRuntime().availableProcessors() - 1

8.2.2.1.2.1. Why “Minus One”?

The logic behind this is that the thread that submits the task to the pool (e.g., the application’s main thread) will also participate in the work. This design prevents the system from creating more threads than cores, which would lead to performance degradation from excessive context switching. By having cores - 1 worker threads plus the submitting thread, you get a total of cores threads actively working, perfectly matching the hardware. That’s also the reason for seeing Processing X on thread: main on the above logs sometimes.

You can check the capacity on your machine with this code:

System.out.println("Common Pool Parallelism: " + ForkJoinPool.commonPool().getParallelism());It’s also possible to override this default by setting a JVM system property at startup, though this is generally only needed for advanced performance tuning:

-Djava.util.concurrent.ForkJoinPool.common.parallelism=N8.2.2.2. I/O-Bound: The Dangerous Trap

An I/O-bound (Input/Output) task is one where the limiting factor is waiting for an external resource. This could be a network call to a REST API, a database query, or reading a file from a slow disk. The CPU is not busy; it’s mostly idle, just waiting for a response.

- Factory Analogy: This is like a task where each worker must make a phone call to an external supplier and wait on hold for an answer. The worker isn’t busy; they are blocked. Their assembly line is idle while they wait.

This is where using a parallel stream is dangerous. Here’s why:

Parallel streams, by default, use a shared, application-wide thread pool called the ForkJoinPool.commonPool(). This pool is sized based on your number of CPU cores (e.g., a machine with 8 cores might have a pool of 7 threads).

If you give a parallel stream an I/O-bound task (like calling a slow API for each item), all the threads in this common pool will quickly make their calls and get blocked waiting for responses.

The result is thread pool starvation. You’ve filled up every available worker thread in the shared pool with tasks that are just waiting. No other part of your application that relies on this common pool (including other parallel streams) can run until those I/O calls complete. You’ve effectively crippled your application’s concurrency.

Now, let’s look at an I/O-bound task. Imagine we need to call a remote API for a list of user IDs to fetch their data. We’ll simulate the network latency with Thread.sleep():

public class IoBoundTask {

public static String performBlockingOperation(final int id) {

System.out.println("Fetching data for " + id + " on thread: " + Thread.currentThread().getName());

try {

// Simulate a 1-second network delay

Thread.sleep(1000);

} catch (final InterruptedException ignored) {

Thread.currentThread().interrupt();

}

return "Data for ID " + id;

}

}

8.2.2.2.1. The Dangerous Trap: Using a Parallel Stream

First, let’s see what not to do. A developer might naively reach for a parallel stream to “speed up” the API calls.

final List<Integer> ids = IntStream.rangeClosed(1, 60).boxed().toList();

System.out.println("Starting I/O-bound task with parallel stream...");

final long startTime = System.currentTimeMillis();

// This will quickly block all threads in the common ForkJoinPool

final List<String> results = ids.parallelStream()

.map(IoBoundTask::performBlockingOperation)

.toList();

final long duration = System.currentTimeMillis() - startTime;

System.out.println("Finished in " + duration + "ms.");If your machine has 8 cores, the common pool has 7 worker threads. It can only run 7 of these 1-second tasks at a time. To process all 60 tasks, it will need to work through roughly 9 batches (60 tasks / 7 threads ≈ 8.57). The total time will be around 9 seconds, during which the shared common pool is completely useless for any other part of your application. The problem gets linearly worse as you add more tasks.

8.2.2.2.2. A Sidestep, Not a Solution: What About a Custom ForkJoinPool?

A developer familiar with concurrency might see the common pool getting starved and think, “I know! I’ll just run my parallel stream on a custom ForkJoinPool to isolate it!”. If you really want to use a parallel stream for blocking I/O, at least do it in a separate, custom ForkJoinPool. By doing so, the rest of the parallel streams of your app won’t be affected once the pool gets cluttered by the blocking tasks.

While this is a valid technique for isolating CPU-bound tasks, it’s the wrong solution for this I/O-bound problem. It doesn’t fix the underlying issue; it just moves the problem to a different thread pool.

final List<Integer> ids = IntStream.rangeClosed(1, 60).boxed().toList();

final ForkJoinPool customIoPool = new ForkJoinPool(50);

System.out.println("Starting I/O-bound task with parallel stream...");

try {

customIoPool.submit(() ->

ids.parallelStream()

.map(IoBoundTask::performBlockingOperation)

.toList()

).get();

} finally {

customIoPool.shutdown();

}Here’s why this is still an anti-pattern for I/O:

- Inefficient Thread Use:

ForkJoinPoolis optimized for short-lived, CPU-intensive “forkable” tasks. Its work-stealing algorithm isn’t designed for threads that will be blocked for long periods waiting for network responses. The threads in your new pool will still be consumed and sit idle. - It’s Still the Wrong Tool: You’re using a highly specialized tool (a Formula 1 car) for a job it wasn’t built for (off-roading). While you may have moved it to a private track, it’s still going to perform poorly.

Isolating the task prevents it from starving the common pool, which is a minor improvement, but it’s fundamentally an inefficient and inappropriate use of a ForkJoinPool. To handle blocking I/O correctly, we need a different tool altogether.

8.2.2.3.The Right Tool for I/O Tasks

For I/O-bound concurrent work, parallel streams are the wrong tool. The modern Java solution is to use CompletableFuture with a custom ExecutorService.

This approach allows you to create a separate thread pool specifically for your blocking I/O tasks. You can size this pool appropriately (often larger than the number of cores, since the threads will mostly be waiting) and isolate this work, preventing it from starving the rest of your application.

final List<Integer> ids = IntStream.rangeClosed(1, 100).boxed().toList();

final ExecutorService ioExecutor = Executors.newFixedThreadPool(50);

System.out.println("Starting I/O-bound task...");

try {

final long startTime = System.currentTimeMillis();

final List<CompletableFuture<String>> futures = ids.stream()

.map(id -> CompletableFuture.supplyAsync(() -> IoBoundTask.performBlockingOperation(id), ioExecutor))

.toList();

final List<String> results = futures.stream()

.map(CompletableFuture::join)

.toList();

final long duration = System.currentTimeMillis() - startTime;

System.out.println("Finished in " + duration + "ms.");

} finally {

ioExecutor.shutdown();

}Even with 100 tasks, our dedicated ioExecutor with a capacity of 50 threads can handle the load gracefully. It will run the first 50 tasks concurrently in the first second, and the next 50 tasks concurrently in the second second. The entire operation will complete in just over 2 seconds (100 tasks / 50 threads = 2 batches).

This powerfully illustrates why isolating I/O-bound work on a properly-sized, separate thread pool is the correct and scalable solution.

8.2.2.4. How to Choose the Right Concurrency Model

Navigating Java concurrency involves asking two critical questions: “Which tool should I use?” and “How many threads should I allocate?” The answer to both depends almost entirely on one factor: whether your workload is CPU-bound (computation-heavy) or I/O-bound (waits for network or disk).

This distinction is the key to performance tuning. It dictates whether you need a small thread pool matching your CPU cores or a much larger one to handle waiting tasks. To simplify this, here is a practical cheatsheet to guide you in choosing the right concurrency model and thread pool size for your specific needs.

8.2.2.4.1. CPU vs I/O Bound, A Simple Rule to Remember

- CPU-Bound Work? → Use a Parallel Stream.

- I/O-Bound Work? → Use

CompletableFuture.

8.2.2.4.2. Sizing Your Thread Pool: A Quick Guide

For CPU-Bound Tasks: The ideal pool size is typically the number of available CPU cores. If you have 8 cores, you want about 8 threads. Any more than that and you gain nothing; the CPU is already at 100% capacity, and the system wastes time with context switching between the extra threads.

For I/O-Bound Tasks: The threads spend most of their time waiting for a response from a network or disk. They are not using the CPU. Because the threads are idle, you can have many more threads than you have CPU cores. The goal is to have enough threads to keep the I/O channel (e.g., the network connection to an API) saturated. A well-known formula, derived from Little’s Law, is:

Pool Size = Number of CPU Cores * (1 + Wait Time / Service Time)

Service Time = The time the thread is actively using the CPU.

Wait Time: = The time the thread is blocked waiting for I/O.

8.2.3. Understand the Execution

The concept of lazy evaluation reveals another interesting behavior when mixing parallel and sequential operations. Take a look at the following code:

final List<String> activeList = userList.parallelStream() // Start in parallel mode

.filter(User::isActive)

.sequential() // Switch to sequential mode

.map(User::getName)

.collect(Collectors.toList());At first glance, this looks like a clever optimization: perform the filtering in parallel to use all your CPU cores, and then switch to a safe, sequential mode for the final mapping step.

However, this is not what happens. Because of lazy evaluation, the stream pipeline is just a blueprint. The calls to .parallel() and .sequential() aren’t workstations; they are like a single toggle switch on the factory’s main power console. When you call the terminal operation, the factory manager simply looks at the final position of that switch to decide how to run the entire production line.

Since .sequential() was the last one called in the chain, it flips the switch to “sequential,” and the entire pipeline runs on a single thread. The initial .parallelStream() instruction is completely overridden.

The rule is simple: the last call to either .parallel() or .sequential() in a stream chain determines the execution mode for the entire pipeline. For example, the opposite is also true—this entire stream would run in parallel:

// This ENTIRE stream runs in PARALLEL

final List<String> activeList = userList.stream() // Starts sequential

.filter(User::isActive)

.parallel() // The final switch is to parallel!

.map(User::getName)

.toList();Treat these methods not as steps in your process, but as configuration settings for the entire operation to avoid performance surprises and truly master the stream execution model.

9. Conclusion: Your Journey to Mastering the Java Stream API

We’ve traveled a long way from the rigid world of C++ and traditional for loops to the elegant, expressive power of the Java Stream API. This journey has taken us deep into what makes Java Streams so revolutionary: they aren’t just collections, but highly efficient, lazy-evaluated data processing pipelines. By understanding core concepts like lazy evaluation, operation fusion, and short-circuiting, you’re now equipped to write code that is not only cleaner but also surprisingly performant.

The key takeaway is that mastering the Java Stream API is about more than just learning new syntax—it’s about embracing a declarative programming mindset. You’ve seen how to build readable data transformations, harness the automatic power of parallel streams for CPU-bound tasks, and avoid common pitfalls. The Stream API empowers you to focus on the what of your logic, trusting the JVM to handle the how of optimization.

Now, I challenge you to keep the promise I made to myself years ago. The next time you’re about to write a for loop, pause and ask: “Can this be a stream?” Start building those pipelines and discover the beauty and efficiency of modern Java for yourself.

Have a favorite Stream trick or a question about a complex pipeline? Share it in the comments below—let’s master the Java Stream API together!